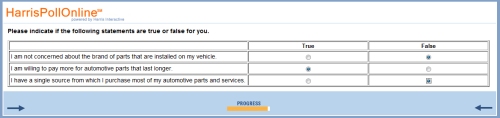

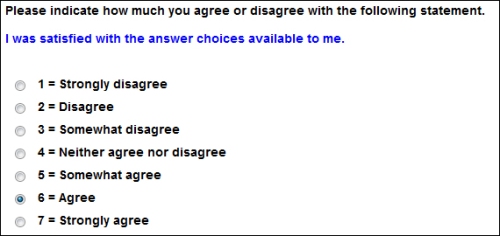

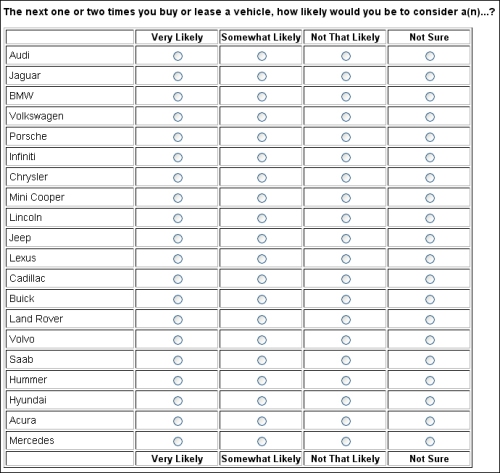

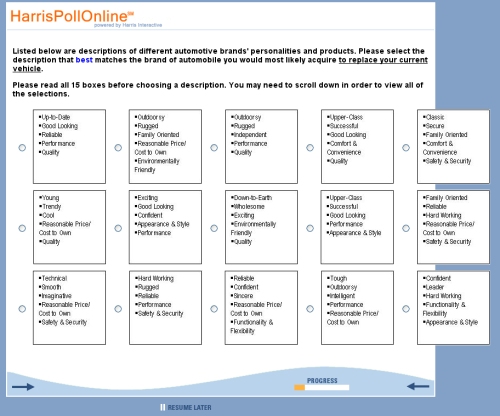

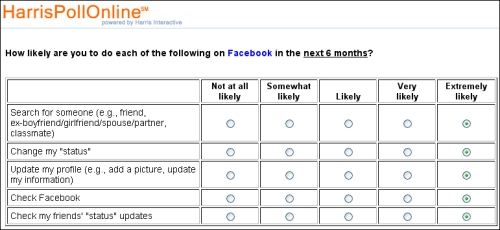

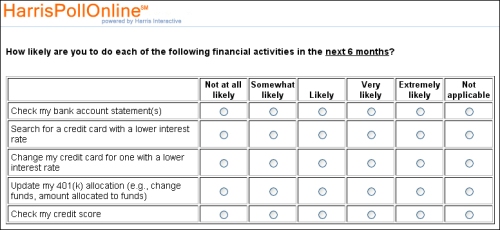

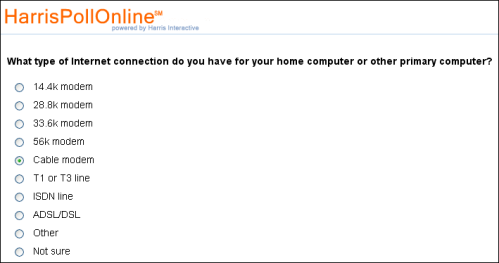

Harris often ends a survey with a radio-button question along the lines of “How much do you agree with this statement: I noticed mistakes in this survey,” with answer choices ranging from strongly agree to strongly disagree. (I keep neglecting to get a screencap of that, but that’s the gist.)

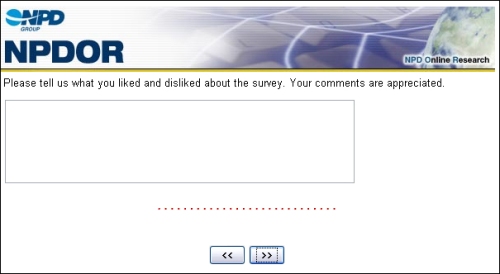

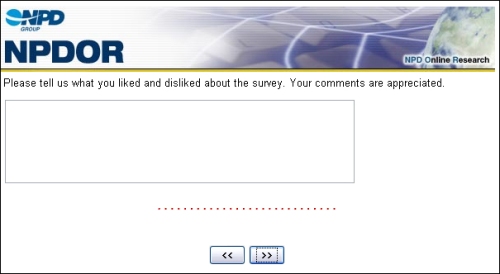

Here’s NPD’s similar approach:

Polling Point has asked, at various times, something along the same basic lines; I remember because there was something they kept getting wrong, and I kept complaining about it, to no avail. (It was something minor related to the answer choices they were giving about which news program you watched most often, but I can’t remember the details.)

All of these approaches are, on the one hand, commendable: respondents should be able to provide feedback, and asking directly in the research instrument itself lets the feedback go directly to the survey designers, as opposed to making those with issues go through the front office of the giant research company to try to make a complaint that will never be properly routed.

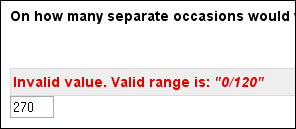

But here’s the other side of the coin:

Wouldn’t it be better to extensively test our research before it goes into the field?

Suggestion:

Bring in a consultant to edit your questionnaires in the short term and, in the long term, to train your staff in writing better questions and designing better studies. Me, for instance.

Alternate suggestion:

Offer special incentives to especially active panelists whose past feedback has shown them to be sharp critics of errors, in exchange for “previewing” certain surveys. You can probably get them to do a decent job of quality assurance testing/user acceptance testing/proofreading/whatever you want to call it for nothing other than an additional entry in the sweepstakes or an extra $2 in their prize money account. (Yes, it occurs to me they could be doing this right now, just without the special incentives, and I’m doing their work for free.)

The thing is, finding errors and other usability problems in complicated market research instruments really isn’t the sort of thing you want to be doing in real-time on a live survey after people work their way through it. Whether you’re exposing the whole panel or just the selected subset of early reviewers to the unedited project, you’re still trying to do triage on something that should have been right before it launched.

Get it right the first time, guys.