Not a newsflash: I hate matrixes. That being said, I acknowledge they’re sometimes going to be necessary. If you’ve got to use one, though, I think it’s in everyone’s interest to keep each one as small as possible, and to use as few of them as possible.

There’s often a point in web surveys where the respondent is asked whether or not he has heard of a number of different items – brands of orange juice, for instance, to use my favorite example. That’s followed by another question asking which of the brands the respondent has personally tried.

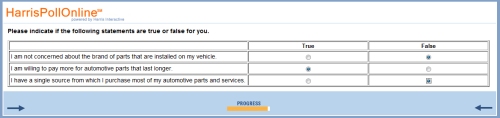

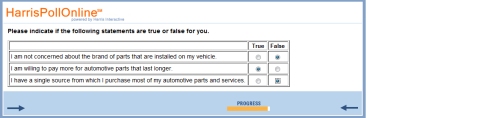

Then come the matrixes, where respondents are asked to rate each of the brands they’ve heard of – not just the subset they’ve personally tried – across a number of rating criteria, each of which will likely get its own matrix on its own page. This is the point where the respondent regrets being so honest about the brands he’s seen in the grocery store or advertised on TV, because he suddenly realizes he’s going to spend the next fifteen minutes of his life clicking “don’t know” or “not applicable” on matrix after matrix asking about the best flavor, the least pulp, the nicest packaging, and so on.

I understand, very clearly, that for us as researchers this isn’t entirely a waste of time – we can give our clients a report that crosstabs the attitudes by both active users and those who are merely aware of each brand. It has the added “bonus” of inflating the base size: you get to tell your client that you asked the evaluation questions of significantly more people than you would have if you’d included only those who use the brands in question. (This is the product research version of asking unlikely voters how they’ll be voting.) And, of course, it’s possible that some respondents know something about products they don’t use themselves and may be able to provide useful feedback nevertheless. But, still:

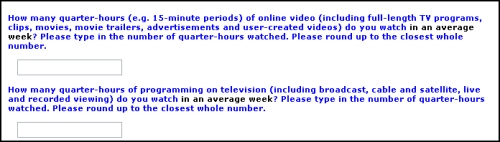

I’m writing this, actually, during a break from a survey I’m in the middle of taking. I think I’m on about the sixth matrix page. There are 8 columns going across – a 7-point Likert scale plus a “not sure” – and 10 rows going down, only 1 of which asks about something I truly know about; the other 9 are things I’ve heard of but have no ability to evaluate. I don’t want to go into specifics, but let’s pretend it’s about travel: it first asked me which foreign cities I’d ever considered traveling to, then which ones I’d actually visited, and now it’s asking me to rate every city I’d considered going to on the quality of its museums, central train station, hotels, safety, and so on. There might be the occasional question I can answer based on something a friend told me or something I vaguely remember reading on Wikipedia or in a Rough Guide, but in general, I’m just not able to comment on the friendliness of the Dublin populace, you know?

Not only is this frustrating, but I’m also (and this wouldn’t apply to an ordinary respondent) acutely aware that speeding through page after page, clicking “not sure” for 9 of the 10 rows and then rating the one thing I’m familiar with, will probably get my responses discarded anyway.

I have a sense, based on the level of detail each matrix has gone into, that I’m going to have another 4 or 5 of these waiting for me, and honestly, I’m hoping I time out while I write this; if I do, I’m done.

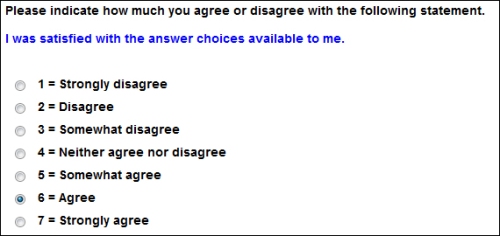

Is an aggravated respondent really in anyone’s best interest?