(edit, April 6, 2011: Over a year since I posted this, and I just took another Zogby poll (now an “Ibope Zogby poll,” by the way), and they’re still asking this question the same way. And I still, despite being pretty politically aware, knowing my congressman’s name, and having even written the guy and gotten a response on at least one occasion, have absolutely no idea what district number I live in. Everything below this parenthetical addition is old content, so if you have seen it before, sorry.)

This is from a couple of weeks ago, and I’m just now getting a chance to post it.

88% of Americans live in a state with fewer than 53 US congressional districts in it. Only California has that many; Texas comes in second with 32.

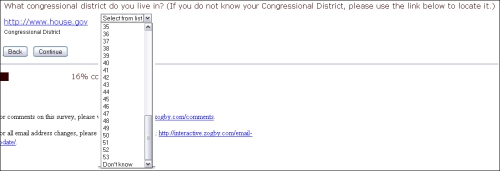

And yet, here’s how the good folks at Zogby Interactive ask what congressional district you live in:

That’s right. Zogby asks what state you live in, and then asks you, regardless of how many districts your state contains, which of 53 districts you live in. This is terrible for a lot of reasons, beginning with what should be obvious to everyone: it’s really lazy.
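For what it's worth, the non-lazy version isn't much work. Here is a minimal sketch, assuming the survey already knows the respondent's state and has a table of district counts. Only California's 53 and Texas's 32 come from the numbers above; Wyoming's single at-large seat is added for contrast, and the names `DISTRICTS_PER_STATE` and `district_choices` are hypothetical, not anything Zogby uses.

```python
# District counts per state. CA and TX are the figures cited in this post;
# WY has a single at-large seat. A real survey would need all 50 states.
DISTRICTS_PER_STATE = {"CA": 53, "TX": 32, "WY": 1}


def district_choices(state):
    """Return only the district numbers that exist in the given state."""
    count = DISTRICTS_PER_STATE[state]
    return list(range(1, count + 1))


# A Texan sees 32 options instead of 53; a Wyomingite sees one.
print(len(district_choices("TX")))
print(district_choices("WY"))
```

With a single-district state, the question could simply be skipped.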

Looking at this from a practical political standpoint, though, it’s a mess. Folks just don’t think about their congressional district that way. Many (certainly not all) will know the name of their representative — or at least be able to pick the name from a short list of possibilities — but the odds of them knowing the actual district number aren’t great.

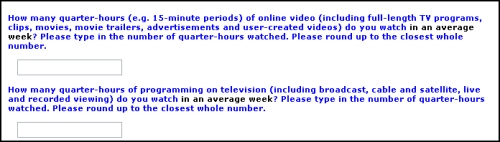

That being said: it can be problematic to ask people who their representative is if you’re then going to ask whether they’ll vote for that person. It creates a priming effect, reminds the respondent of incumbency (or informs them of it, if they are less focused on politics), and makes it difficult to ask a clean “would you vote for x or y” question. While I didn’t get that question as a follow-up, it’s possible some respondents did, though I somewhat doubt it this far out.

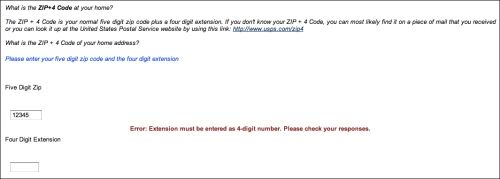

A much better way to ask this question is to ask for zip code, which will let you look up the right district in most cases. A simpler method (for the respondent), and one that might feel less personally intrusive, is to remember that this is the internet and present a state map, on which the respondent can zoom in and actually CLICK WHERE HE LIVES.
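The zip code approach can be sketched roughly like this, assuming you have a zip-to-district table from somewhere. The table contents below are made-up placeholders (not real zip codes or real assignments), and `lookup_district` is a hypothetical name. The "in most cases" caveat matters: some zip codes straddle district lines, so the sketch returns the candidates rather than guessing.

```python
# Hypothetical lookup table: zip code -> set of (state, district) pairs.
# These entries are placeholders for illustration, not real data.
ZIP_TO_DISTRICTS = {
    "00001": {("CA", 12)},
    "00002": {("TX", 7), ("TX", 18)},  # a zip that straddles two districts
}


def lookup_district(zip_code):
    """Return (district, candidates) for a zip code.

    district is a (state, number) pair when the zip maps to exactly one
    district, otherwise None. candidates is the sorted list of every
    district the zip touches (empty if the zip is unknown).
    """
    candidates = sorted(ZIP_TO_DISTRICTS.get(zip_code.strip(), set()))
    if len(candidates) == 1:
        return candidates[0], candidates
    return None, candidates
```

When the result is ambiguous, the survey only has to ask the respondent to choose among the two or three candidates (ideally by representative name), which is a lot friendlier than a list of 53 numbers.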

And, saying what should be obvious, but maybe isn’t: if you structure your research in such a way that only the very very very top-most super-engaged respondents are qualified to answer a follow-up, your results are only going to reflect that tiny slice of the population.

Pathetic, and sadly, about what one would expect.