Joel Spolsky runs a software company and writes a blog called Joel on Software, but I often find that what he writes applies to business in general, and occasionally to the research world as well. Recently, in his Inc.com magazine column, he wrote about the problems that develop when too many people are involved in a decision-making process. In this particular case, he cites a former Microsoft developer's account of how designing the Windows Vista shutdown menu took something like 43 people something like a full year and produced a menu with nine near-identical options. The developer calls it The Windows Shutdown Crapfest. The lesson there is obvious (too many cooks spoil the soup) and relevant, I think, to our work, with plain-as-day solutions: trim your meeting invite list and get extra hands out of the work. But dig a little deeper for a more important lesson.

Each of the links in the paragraph above is worth perusing, but the most worthwhile link I’ll have for you today is this one: Joel’s original 2006 post on the topic, which does a great job of explaining the resulting mess in terms we should all be able to understand:

The fact that you have to choose between nine different ways of turning off your computer every time just on the start menu, not to mention the choice of hitting the physical on/off button or closing the laptop lid, produces just a little bit of unhappiness every time.

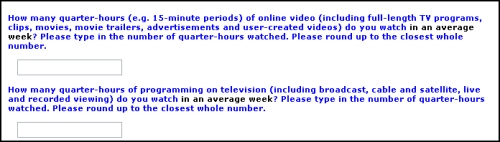

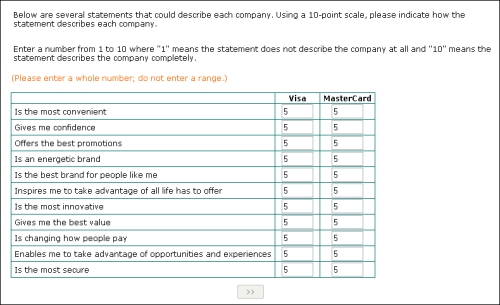

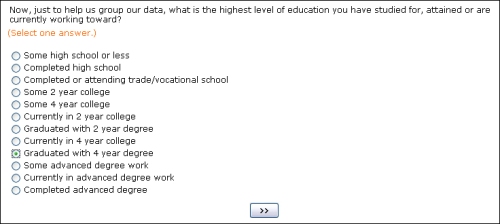

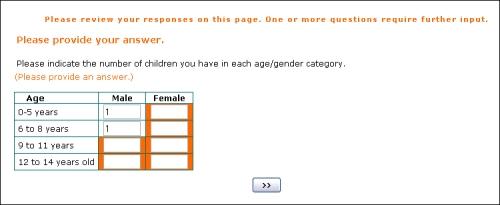

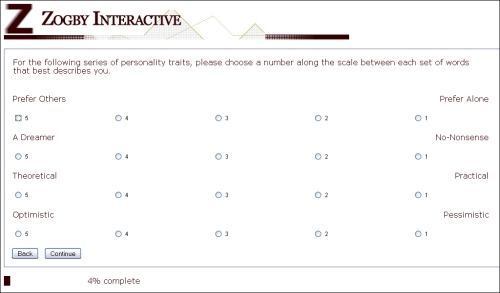

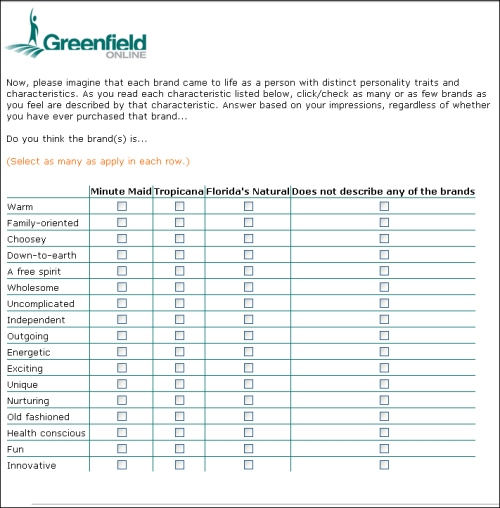

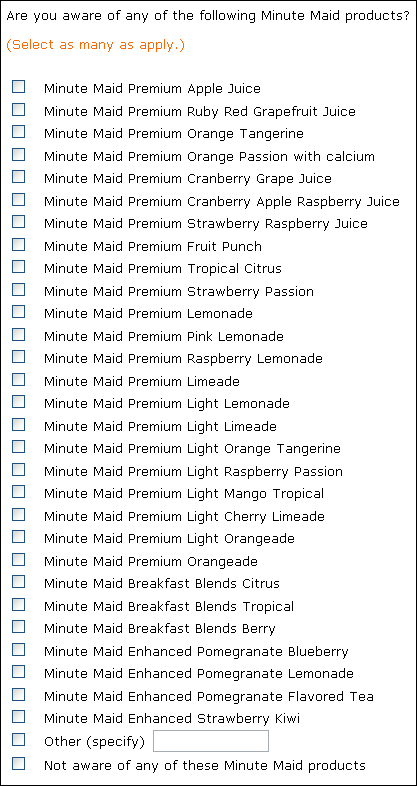

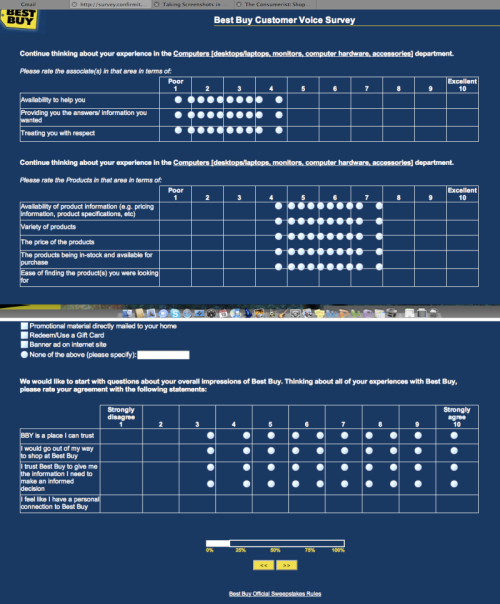

How do we expect our respondents to feel when we ask them to tell us if they are Extremely Satisfied, Very Satisfied, Somewhat Satisfied, Neither Satisfied Nor Unsatisfied, Somewhat Unsatisfied, Very Unsatisfied, or Extremely Unsatisfied with something? What about when we ask them that about a whole page worth of somethings? And what about when some percentage of the questions (anywhere from 1/8 to 1/4, by my rough estimate) don’t apply to the respondent at all? I’d argue we create more than “just a little bit of unhappiness every time.”

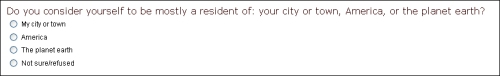

The lesson is the same as it so often is here: keep it simple. Satisfied/Unsatisfied/Not Sure should be perfectly sufficient in most cases, and has the advantage of making the results much more comprehensible at a glance. When comparing results across multiple questions, or across a long timeline of tracking data, it’s infinitely easier to comprehend a single number. The presidential approval number is generally reported as a single figure: 55% in this poll, 48% in this other poll, 53% a month ago, 58% today, etc. Instantly understandable by everyone, as opposed to something like this:

Today, 23% strongly approve of the President’s job performance; 27% say they somewhat approve. Two weeks ago, 29% strongly approved; 17% somewhat approved.

How do you parse that? Strong approval is down 6 points at the same time that mild approval is up 10 points; if you add the “strong” and “somewhat” numbers together, you can see that overall approval is up four points (from 46% to 50%), but what do you do with those shifts in the gradated responses? Well, if you’re the nightly news (and I’m not suggesting that’s who we should be emulating, necessarily), you ignore them and report a four point climb. (Well, depending on your viewpoint, you might say the nightly news would be most likely to point out the six point drop in “one measure of the President’s approval rating” and leave it at that, and I don’t think you’d necessarily be wrong about that observation, so.) If you’d only asked the question as approve/disapprove, though, you’d give respondents a simpler experience, and you’d give those interpreting the results an easier time of it while leaving less wiggle room for those with an agenda.
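For anyone who wants to check the arithmetic in that example, here is a minimal Python sketch. It uses only the four percentages quoted above; the variable and function names (`two_weeks_ago`, `today`, `net_approval`, `shifts`) are my own, made up purely for illustration, and this is not how any particular pollster tabulates results.

```python
# Percent of respondents, taken from the example poll quoted above.
two_weeks_ago = {"strongly approve": 29, "somewhat approve": 17}
today = {"strongly approve": 23, "somewhat approve": 27}


def net_approval(poll):
    """Collapse the graded answers into a single approval figure."""
    return sum(poll.values())


def shifts(before, after):
    """Point change for each graded answer between two polls."""
    return {answer: after[answer] - before[answer] for answer in before}


print(net_approval(two_weeks_ago))                        # 46
print(net_approval(today))                                # 50
print(net_approval(today) - net_approval(two_weeks_ago))  # 4
print(shifts(two_weeks_ago, today))
# {'strongly approve': -6, 'somewhat approve': 10}
```

The single figure moves four points in one direction, while the graded answers move six points one way and ten the other, which is exactly the kind of split that invites competing headlines.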

Let’s see what happens if we offer fewer choices. You really don’t need nine ways to turn off the computer, or seven ways to tell us how satisfied or unsatisfied you are. Honest.