A couple of thoughts on PPP’s bombshell last week about interracial marriage in Mississippi. If you missed it, the poll found that 46% of “usual Republican Primary voters” there say interracial marriage should be against the law (full PDF of results here):

First, this: I like PPP quite a lot. They do solid work, and they release more data on their polls than many of their competitors. They are a Democratic pollster, but if you look at the data Nate Silver puts together for FiveThirtyEight, PPP’s results are both pretty accurate and pretty unbiased. Yes, one can argue that being partisan gives them an agenda, and I’m sure they’re not sad to have uncovered this data point, but at the end of the day a pollster is only as good as its numbers, and PPP’s numbers are good. If anything, I’d say their partisan leaning gives them some “cover” when they want to ask something controversial, as they did here. The actual question wording: “Do you think interracial marriage should be legal or illegal?” I think a good number of pollsters would hesitate to ask that question; it seems, to me at least, too likely to cause offense. More on that in a minute, though.

Needless to say, the fact that 46% of their respondents said “illegal” made a lot of news last week. PPP says they asked the question of Democrats (and, I assume, independents) as well and will be releasing that part of the data shortly, and I’m sure that will make some news, too. I’m not naive enough to believe that racism is dead. It’s worth noting that while the US Supreme Court struck down state laws banning interracial marriage back in 1967, neighboring Alabama didn’t get around to removing the anti-miscegenation language from its state constitution until 2000. But I still think these numbers are surprisingly high. Findings like this generate coverage because people talk about them: in this case, a lot of sad head-shaking and gleeful potshots from one side, and a lot of unoriginal attacks on the pollster from the other, mostly of the tired “but it’s IVR, it could have been an 8-year-old taking the poll” sort. (Because it would be a better or easier-to-stomach finding if 46% of Mississippi 8-year-olds felt this way?)

Still, I think there are about three perfectly reasonable reasons to question these results, so let’s get to it:

First, there’s this: “legal” and “illegal” sound really similar on the phone, no matter how carefully the announcer (or the live interviewer) says the words. Everyone uses that pairing anyway, because the workaround wording is cumbersome. It ends up being something like “Should so-and-so be allowed by law? Or against the law?”, which is problematic because people generally just don’t say “allowed by law,” so you end up causing some confusion there as well. Instead, it might be better, somewhat counterintuitively, to go with something even longer, like “Should Mississippi allow interracial couples to get married? Or should interracial marriage be against the law?” It’s impossible to mishear that, except for the next thing:

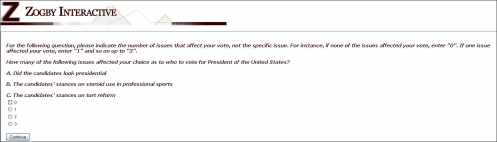

There isn’t (or there wasn’t, until this poll was released) any real debate in this country right now about interracial marriage. There is, however, one about same-sex marriage. I think it’s very possible that some percentage of respondents simply misunderstood the question. This was raised, to an extent, in the comments on the original PPP post about this poll, and I think PPP’s response might have been a little too hasty, though I understand where they’re coming from. To be very, very clear: I do not think the respondents to this poll, or southerners, or Republicans, or southern Republicans, or any people in general, are stupid. I do not think people are hearing the word “interracial” and failing to understand what it means. I think they’re just not hearing the word at all.

Look: people are not taking polls in ideal lab conditions. They’re taking them in real life, in rooms that contain television sets, and children, and the internet, and in some cases they’re taking them in their cars on their cell phones, despite the pollster’s best intentions not to accidentally break the law by calling any wireless numbers. No matter how clearly worded and how well-read the question is, the pollster is competing with an endless supply of distractions for each respondent’s time, and can’t expect each respondent to be giving them his or her undivided attention. It’s 2011, you’re in an election poll, and the 14th question you’re asked, coming immediately after several “how would you vote” questions, is “Do you think interracial marriage should be legal or illegal?” I think you’re going to have one of three reactions, and only one of them is good.

You might hear the question correctly and answer it. Spoiler alert: that’s the good one.

You might hear the question incorrectly and answer it. Another spoiler: that’s bad.

You might get so offended that you hang up the phone. This is so bad that it’s the topic of the next paragraph.

If you ask a question that deeply offends some of your respondents, they’re going to hang up on you. That leaves only the non-offended to answer it. What does that do to your results? If you put a question, out of the blue, in the middle of your Mississippi Republican primary election poll, and that question carries the pretty clear subtext of “are you a racist?”, I think there’s a reasonable chance that many non-racist respondents will decide they know where this is headed (to a bad place) and drop out of the poll.

I actually think something similar may be driving some of the polls showing large numbers of Republicans in the “birther” camp. A lot of non-birthers are hearing that question as “Now I’d like to ask you something to try to make people who share some of your views on politics look really, really stupid. Where do you think Barack Obama was born?” Reasonable Republicans may be dropping out at this point, which not only inflates the number of Republican birthers but also has the side effect of inflating the poll numbers for “candidates” like Donald Trump, because in most cases, if you hang up partway through a poll, none of your previous answers count. If Romney voters start dropping out near the tail end of a poll, their answers to the questions at the top of the poll are also going to go in the garbage, and that’s going to give a bump to the fringe respondents’ favorite candidates.
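To make the arithmetic of that selection effect concrete, here is a minimal Python sketch. The numbers are hypothetical, not PPP’s data, and the model is deliberately crude: it assumes just two kinds of respondents, each with its own hang-up rate at the sensitive question, and it assumes hang-ups are discarded entirely. It is not how PPP or any other pollster actually processes its samples.

```python
def observed_share(true_share: float,
                   breakoff_holders: float,
                   breakoff_others: float) -> float:
    """Share of *completed* interviews giving the answer in question.

    Hypothetical two-group model:
      true_share       -- fraction of the full sample who really hold the view
      breakoff_holders -- fraction of those respondents who hang up at the question
      breakoff_others  -- fraction of everyone else who hang up at the question
    Anyone who hangs up is dropped entirely, like a discarded partial interview.
    """
    completes_holders = true_share * (1 - breakoff_holders)
    completes_others = (1 - true_share) * (1 - breakoff_others)
    return completes_holders / (completes_holders + completes_others)


# Hypothetical: 30% truly hold the view; none of them hang up,
# but 40% of everyone else does.
print(round(observed_share(0.30, 0.0, 0.40), 3))  # 0.417
```

Under those made-up assumptions, a view held by 30% of the people who were called shows up as roughly 42% of completed interviews, without a single respondent lying. The same arithmetic applies to the earlier horse-race questions: if the people who hang up lean toward one candidate, that candidate’s share among completes shrinks even though nobody changed their vote.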

All this is testable, of course, with some A/B split-sample experiments. I think the hardest part is making it absolutely clear that we’re talking about interracial marriage (as opposed to same-sex marriage), which might require, ironically enough, asking about same-sex marriage first, so that it’s abundantly clear in the subsequent interracial marriage questions that we’re now talking about something else.
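A split-ballot experiment like that is usually read by comparing the share giving a particular answer in each half-sample. Here is a minimal sketch of a standard two-proportion z-test in Python, using only the standard library. The function name and the counts are hypothetical, made up for illustration; a real analysis would also have to deal with weighting and with breakoffs inside each half.

```python
import math


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Two-proportion z-test using a pooled standard error.

    x1 / n1 -- respondents giving the answer / completed interviews, version A
    x2 / n2 -- the same for version B
    Returns (z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    # Under the null hypothesis both versions share one proportion, so pool them.
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; the test is undefined")
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical split: version A ("legal or illegal") vs. version B (longer wording).
z, p = two_proportion_z(50, 100, 40, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With only 100 completes per half, even a 10-point gap between the two wordings would not be clearly distinguishable from noise, which is one reason a test like this needs reasonably large half-samples.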

I look forward to seeing what data PPP releases for the rest of the Mississippi respondents from this poll. Offhand, I’d expect the share saying “illegal” to be somewhat smaller, but still larger than many will be comfortable with. Either way, I’d take it all with a grain of salt until someone is able to test my hypothesis here.